FIMI Through the Looking-Glass

Main tactics observed during the Finnish Election - Tuvalu Election provides another case of Chinese interference - How does FIMI look in the 'Global Majority'? - A word on GenAI & infrastructure.

Hey there.

This newsletter always seems a bit too short to encompass all the lessons learned from the past days on foreign information manipulation and interference (FIMI) threats targeting elections. There is so much happening again, and many thoughts I want to share with you in the hope that they will be useful in your daily tasks. I apologize in advance if I am not able to cover everything, but the press corner is usually there to give a broader view of the ongoing media conversation.

I am also starting to receive feedback and insights from you guys. Thank you so much; it really means a lot. Feel free to continue sharing this newsletter, and please let me know about your work and projects ahead of elections.

Finnish Presidential Election: Navigating the Window of Opportunity as Candidates Head to a Run-off

Last Sunday, the Finnish presidential election took place, but no candidate secured a sufficient majority, leading to a run-off in two weeks on February 11. Two candidates are facing each other: the former Prime Minister Alexander Stubb (centre-right National Coalition Party) and the former Foreign Minister Pekka Haavisto (Liberal Green Party), who received 27 and 26 percent of the votes, respectively, in the first round. Jussi Halla-aho, the former leader of the populist Finns Party, came in third with 18 percent of the votes.

Meanwhile, no particularly explosive case of election interference has been revealed at this stage by the Finnish government or civil society actors. Nonetheless, some tactics disclosed by reports include:

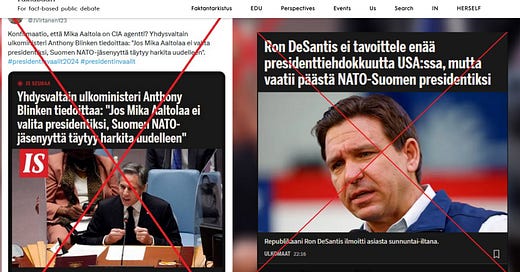

impersonation of the Ilta-Sanomat newspaper on Twitter, by creating and publishing manipulated screenshots of news headlines, aimed at discrediting candidates closely aligned with the US. The meta-narrative being spread here is that the US is interfering in Finnish elections, using NATO membership as a threat and portraying candidate Mika Aaltola as a CIA agent.

According to Faktabaari, the Finnish fact-checking organisation, manipulated screenshots of news headlines were already debunked in the previous year. This tactic has also been observed in previous Russian operations affiliated with the Internet Research Agency (IRA) as well as with Secondary Infektion. For example, as early as 2014, actors linked to the IRA attempted to convince residents in the town of St. Mary Parish in Louisiana, USA, that they faced a health threat linked to an alleged disaster at a local chemical plant. To support this claim, they created a false screenshot of CNN’s homepage showing the alleged disaster and portraying it as a national-level matter. They also created spoofed websites of a Louisiana TV station and newspaper to establish legitimacy and leave no doubt among the targeted audience. This incident is reminiscent of the 2022 Doppelganger/RRN campaign, detected by several actors including EU DisinfoLab, the ISD, the DFRLab, Viginum and Meta.

discrediting of traditional media and state actors, by disseminating and amplifying a conspiracy theory on Twitter, aimed at creating distrust towards the election process. Domestic actors aligned with the far-right party have spread the narrative that there is a coordinated effort by the public broadcaster Yle to undermine support for candidate Jussi Halla-aho and ultimately interfere in the election process. This narrative is supported by the claim that the broadcaster has suppressed polling that would have revealed an increase in support for the candidate.

deceptive offline advertising aimed at discrediting opposing candidates. False campaign posters portraying candidates Jussi Halla-aho and Pekka Haavisto have been seen in Helsinki. The campaign posters appear to mimic authentic ones but attribute false quotes to the candidates. These posters also use defamatory language such as “Fascist Halla-aho”. While this tactic is not online, it is worth mentioning, as we know from previously disclosed campaigns that threat actors such as Russia can create offline assets to serve their narratives online. Here’s an example of this modus operandi targeting France last November.

As the last two candidates head to the run-off, is there a higher risk that potentially ongoing foreign information manipulation activities will intensify? Is there a window of opportunity coming in the next few weeks? To assess this risk, we need to identify potential motivations and incentives for threat actors.

First, the president’s job in Finland holds significant importance as the President has the constitutional lead on foreign affairs outside the EU and is the commander-in-chief of the Finnish armed forces. These competencies make candidates and their parties primary targets for threat actors, such as Russia and China, either to support their campaign or to discredit them based on their ideological, foreign policy, and economic interests.

According to Henri Vanhanen, research fellow at the Finnish Institute of International Affairs, quoted in this Financial Times article: “Both candidates are supporters of liberal democracy and agree on the fundamentals of Finland’s foreign and security policy. The differences are rather in style and emphasis as Stubb is a strong trans-Atlanticist favouring the EU and Nato, and Haavisto on the other hand is known for peace-mediation, environmental activism and UN-related duties.”

It seems that neither candidate is aligned with Russia’s interests. Pekka Haavisto could be the less favored of the two, given his role in enabling Finland’s accession to NATO. His views on environmental issues and the UN may also not align with how Russia perceives these matters.

As for China, it is challenging to identify a clearly favored candidate. Both appear to acknowledge China’s role in global geopolitics. However, according to the Helsinki Times’ scenarios, Alexander Stubb seems to be the less preferred candidate, as he has indicated support for the United States’ policies concerning China’s economic and technological ascent.

In this context, threat actors such as Russia and China may also choose a third way, continuously increasing polarization within Finnish society and exploiting existing fears and vulnerabilities, such as a dismal economy, labor market tensions and foreign policy issues. For instance, Russia decided to unilaterally terminate a Finnish-Russian border agreement a few days before the election to weaponize migrants and exploit internal tensions on this issue. The use of polarization tactics and ongoing hybrid warfare can, in the long run, create distrust in the democratic institutions and undermine the leadership of the new President.

No matter what happens, we also know that watchdogs are out there, such as CheckFirst and Faktabaari, who launched the CrossOver Finland project in September 2023 to study how false and misleading information may be spread on eight platforms, including Google’s search recommendations, Facebook, Reddit, YouTube and TikTok. This joint project aims to understand the relationship between algorithms and the content recommended to Finns on multiple social media platforms. They have created dashboards available here: CrossOver Dashboards. Try them out!

As Finns seem to be increasingly wary of disinformation according to a new study by the DECA research consortium and the University of Turku, we have hope that they will be able to detect and respond to potential FIMI threats.

Tuvalu Election: China’s Cognitive Warfare Continues in the Pacific Region

Last weekend marked an election moment for Tuvalu, a small Pacific island nation that rarely garners attention in Western media. So, why does the Tuvalu legislative election matter this time?

Tuvalu has long been torn between Australian influence and Chinese influence. Both actors see Tuvalu, and other Pacific island nations, as strategic allies in the Pacific to counterbalance each other’s influence. Additionally, Tuvalu remains one of the 12 diplomatic allies of Taiwan. Prime Minister Kausea Natano, who has been supportive of Taiwan, pledged to maintain ties with Taipei. However, he was defeated last Friday by his rival Seve Paeniu, who has said that he will review the country’s ties with Taipei.

Therefore, in the aftermath of the Taiwanese election, with Chinese cognitive warfare persisting, the Tuvalu election represents an opportunity to weaken Taiwan’s supporters and increase Chinese influence in the Pacific. This opportunity has been confirmed by recent reports, describing the following tactic:

The PRC’s broadcaster CGTN has targeted a domestic news outlet, Tuvalu Broadcasting Corp, sending an email to recruit one of their senior reporters to develop an inauthentic news article. Instructions are included in the email: write an 800-word opinion article “on Tuvalu election and it’s potential to cut ties with Taiwan.” A payment of 450 dollars is offered for publishing the article.

This tactic is reminiscent of the general Chinese playbook of recruiting local actors, through financial transactions or partnerships, to spread the PRC’s narratives in the targeted country. The requested narrative also plays on existing local debates to shape the discussion according to the PRC’s interests.

Coming Next: Elections in the ‘Global Majority’ - The Case of South-East Asia

In the next few days, several elections are scheduled to take place across the globe:

Costa Rica and El Salvador on February 4th,

Azerbaijan on February 7th,

Pakistan on February 8th.

Perhaps you have already considered great investigative projects in the field of threat intelligence to monitor potential foreign information manipulation and interference targeting these countries. But you may only know Azerbaijan and Pakistan as threat actors targeting other countries such as France or India.

Perhaps you have tried drafting or even implementing Trust & Safety policies for the El Salvador election, based on common standards developed mostly in the U.S., for the U.S., based on the U.S. experience with online electoral interference. But El Salvador’s President Bukele has his own army of trolls, who may simply disregard your policies and launch a harassment and defamation campaign against you.

Perhaps you have realized that reporting about Costa Rica won’t be as easy as you thought, because you have no clue about Costa Rica.

Perhaps you just don’t know about the ‘Global Majority’?

…

Last week, I discussed the importance of frameworks as a powerful tool to influence our perception of the information environment and how to develop responses to the perceived threats. As non-Western countries also enter the election cycle, this battle for framing current threats becomes even more significant. Last Tuesday, this topic was highlighted in a talk organized by All Tech Is Human titled ‘Global Majority Spotlight: Elections’. This talk featured three women actively engaged in the Tech and Democracy conversations in South-East Asia: Sabhanaz Rashid Diya (Tech Global Institute), Saritha Irugalbandara (Advocacy Hashtag Generation) and Shmyla Khan (Researcher and Campaigner).

Among many topics, they mentioned their disappointment regarding the UNESCO guidelines for the governance of digital platforms. The ‘global majority’, as they refer to it, is not represented in these guidelines. Western standards are being applied to local contexts ineffectively, without considering the ecosystem viewpoint. According to them, it is even by design that the international community does not discuss who is in the room making these guidelines. They call for people to first describe the information environment and the tactics being played there before trying to develop or apply concepts shaped in other contexts.

So, what can we actually say when we talk about foreign information manipulation and interference, for example, in South-East Asia?

In South-East Asia, perhaps we can start by removing the term ‘foreign’. According to Meta’s report in December 2022, 90% of coordinated inauthentic behavior (CIB) operations in the Asia-Pacific were partly or wholly focused on domestic audiences. These operations originate from domestic actors, such as government agencies targeting their own population.

Then, what does information manipulation actually mean for this region and countries like Pakistan, Bangladesh, India or Sri Lanka?

Information manipulation in this context differs from our understanding in the West, where it typically involves distorting context and creating false evidence. In several countries in South-East Asia, the premise of free expression needed for such manipulative tactics does not even exist. If we choose to keep the term ‘information manipulation’, it primarily refers to one major tactic: controlling the state-sponsored narrative by punishing and silencing alternative voices.

To implement this tactic, states in South-East Asia have established new laws or amended existing ones over the last few years to strengthen their ability to repress and censor civil society actors such as journalists and media. Legislation has become a useful tool to justify suppressing voices, both online and offline, by blocking access to social platforms during demonstrations in Pakistan or to independent news media during the latest election in Bangladesh.

What are the effects of this tactic on elections? It prevents the population of these countries from accessing a plurality of information and verifying sources. Additionally, it erodes trust in the election process and can result in lower turnout, as seen in Bangladesh where 72 percent of voters stayed at home, according to the Atlantic Council. This trend is further explained by the demographics of these countries, where a significant portion of the voters are young people, many of whom are voting for the first time.

In Western countries, people strive to dominate narratives across the web, employing all the tools they can access. While it is not a fair battle, at least there is one. In South-East Asia, there is a single top-down punitive narrative relying on an arsenal of tools available only to the upper class. Marginalized communities are left with no tools, except boycotting, and they live in fear of reprisals.

While people in South-East Asia do not have significant power within their countries to counteract this authoritarian information environment, their voices are also under-represented in the international community. But they are the ones who can help us frame and counter the hybrid threats targeting their electoral processes.

Your press corner

Here are the weekly readings to keep you connected to all the conversations on global elections and information operations:

ENQUETE FRANCEINFO. Derrière les tags d'étoiles de David à Paris, un vaste réseau de désinformation russe (francetvinfo.fr) - more details on this Russian campaign targeting France and linked to the broader RRN campaign detected by Viginum, the French Agency to counter foreign digital interference.

Germany unearths pro-Russia disinformation campaign on X | Germany | The Guardian - revelations about another branch of RRN aka Doppelganger, this time targeting Germany.

'Matriochka', la nouvelle campagne de désinformation anti-ukrainienne à destination des médias occidentaux | TV5MONDE - Informations - another Russian information operation called ‘Matriochka’, bearing a resemblance to RRN/Doppelganger, but aiming this time at bombarding Western media with requests to fact-check information about Ukraine.

Russian 'cyberwar' could exploit divisions in Scotland | The Herald (heraldscotland.com) - the significance of cyberwarfare, as discussed by Ciaran Martin, former chief of GCHQ, the UK’s secret intelligence listening post.

Google and Meta are assisting Russia in conducting information operations against Western Democracies. - Russia Vs World - Alphabet, the parent company of Google, and Meta, owner of Facebook, are under scrutiny for allegedly neglecting U.S. sanction regulations.

Pelosi Suggests Foreign Influence Behind U.S. Pro-Palestinian Activism | TIME - An incendiary news headline.

A subtle opponent: China’s influence operations (globalgovernmentforum.com) - the third episode of the Global Government Forum saga on interference, discussing China’s influence operations.

Unbalanced Information Diet: AI-generated deception / Chinese-Originated Disinformation Spreading on Social Media; AI-Generated Profile Pictures Make Fake Accounts Look Real - The Japan News (yomiuri.co.jp) - The Japanese media outlet Yomiuri is starting to raise awareness about FIMI threats targeting Japan, including Chinese FIMI campaigns.

Seoul’s spy agency accuses China of major cyber attacks (koreaherald.com) - South Korea is also getting angry at China and North Korea.

Leaks and Revelations: A Web of IRGC Networks and Cyber Companies (recordedfuture.com) - Iran’s infrastructure shows a persistent threat.

Georgia may make using AI deepfakes in election campaigns illegal - al.com - Georgia, USA, is considering making AI deepfakes illegal in election campaigns. Would it be enough to deter threat actors?

Ghana ayɛ hu oo! – Cheddar decries AI-manipulated video of his views (ghanaweb.com) - AI-manipulated videos reach Ghana ahead of the country’s election. This time, it was debunked by its victim to raise awareness among the population.

Strengthening the Resilience of Canada's Democracy (newswire.ca) - Canada is always one step ahead when it comes to countering FIMI threats. Lots of resources here such as the Protecting Democracy Toolkit and The Countering Disinformation Guidebook for Public Servants.

Q&A: Taiwan's digital minister on combatting disinformation without censorship - Committee to Protect Journalists (cpj.org) - insights from Taiwan’s digital minister Audrey Tang in an interview with CPJ.

IN FOCUS: Taiwanese came together to combat disinformation - Taipei Times - lessons learnt about teamwork and a ‘whole-of-society response’.

Comparing Tech 2024 Election Efforts (substack.com) - a great summary and analysis by Katie Harbath in her newsletter Anchor Change. I strongly recommend subscribing if you are not already her reader.

Disinformation is often blamed for swaying elections – the research says something else (theconversation.com) - if you feel like you will never be ready before elections, this research’s results can help you relax a bit…

Fakes, forgeries and the meaning of meaning in our post-truth era (ft.com) - … if you were already too relaxed.

Elon Musk Spreads Election Misinformation on X Without Fact Checkers - The New York Times (nytimes.com) - coming back to the question of influencers on social platforms.

MAGA Has TDS—Taylor Derangement Syndrome - by A.B. Stoddard (thebulwark.com) - The Taylor Swift conspiracy theories created by some Republicans to diminish her influence over the American youth continue.

When GenAI Becomes Actually Useful

Since the deepfake Biden robocall event in New Hampshire, a lot has been said in the news about the dangers of Generative Artificial Intelligence (GenAI) when leveraged by bad actors. However, rather than being particularly instructive, the headlines have primarily fueled website traffic and generated revenue for news outlets.

Nevertheless, one interesting piece of news has emerged, indicating that the deepfake audios were likely created using technology from voice-cloning startup ElevenLabs. Several tests have been conducted to verify this hypothesis.

This is not the first time that the company behind the technology used in an information operation has been revealed. Last September, NewsGuard detected ElevenLabs technology in another incident involving conspiracy theories and AI-generated voices, including a clone of former President Barack Obama’s voice. Moreover, in early 2023, Graphika reported on the use of GenAI technology provided by the London-based company Synthesia in Chinese operations.

What is the effect of these reports?

It means that GenAI may shift from a perceived threat targeting our electoral processes to a real vulnerability that our counter-FIMI community can exploit. Indeed, threat actors have not excelled over the years at covering their tracks. As one OSINT expert I know describes it, their main characteristic is their laziness.

While GenAI may seem an attractive tool to enhance the sophistication of their operations, it also demands more effort to conceal the technology used, along with potential proxies and financial transactions involved in fabricating and delivering GenAI content.

GenAI in 2024 is an opportunity for us to invest in the Internet infrastructure, track threat actors and make new connections between them by following their footsteps on the path of AI-generated content.

Thank you for taking the time to dive into this newsletter. Let me know what you thought about it, and share any tips to improve it!