What Happens in the Americas Doesn't Stay in the Americas

Signals of Foreign Interference in the Portuguese Election - Murthy v. Missouri Case: a Political Campaign or an Information Manipulation Operation? Snapshot of the Upcoming Turkish Local Elections.

Hey there.

In this newsletter, we will explore signals of potential foreign interference in the Portuguese election, thanks to Inês Narciso, who joins me in the first part of this newsletter to share her observations on the recent vote.

Then, looking at the ongoing Murthy v. Missouri case, perhaps better known in Europe as the ‘Censorship Industrial Complex’ theory, we will explore the limits of current definitions and ask ourselves which criteria, such as malign intent, we can use to characterize the tactics we are observing.

Finally, because local elections can also be important moments, we will take a quick look at what’s happening ahead of the Turkish local elections.

I hope you will enjoy it!

What to expect:

Latin America Gives a Boost to the Extreme-Right in Portuguese Elections

This is the first direct external contribution to this newsletter, by Inês Narciso. Inês is an OSINT expert and a volunteer at VOST Europe who has carried out research on disinformation and influence operations at the ISCTE-IUL MediaLab. She participates in several European and international working groups in these areas. As a former OSINT Curious member, she also worked on the Obsint Guidelines, which aim to strengthen ethical commitments and methodological quality in public OSINT investigations.

Today she shares her observations on signals of foreign interference efforts, likely from Brazil and Argentina, aimed at promoting the far-right party during the latest Portuguese elections.

Why does this matter? As the European Parliament elections draw closer, every previous election in Europe can inform us about how our adversaries might try, or are already trying, to target European audiences. Here are her insights:

The Portuguese elections of 10 March were marked by significant growth of the extreme right, which quadrupled its presence in Parliament to become the third-largest force, with almost 20% of the vote.

Two weeks before the Portuguese went to the polls, news broke of a possible foreign interference operation that targeted the two main centrist parties, the Socialist Party (PS) and the Social Democratic Party (PSD), with YouTube ads. The ads criticized current and past policies of both parties, made corruption accusations and questioned the PSD leader’s ability to lead the Portuguese right.

The case was first reported by MediaLab, a research lab at ISCTE - University Institute of Lisbon, known for identifying influence operations and disinformation campaigns. The lab found connections to possible influence operations in Panama, Singapore and Romania. Since then, at least two newspapers have investigated the topic and found connections to an Argentinian company that was also involved in the Milei campaign, and possibly in Bolsonaro’s campaign.

So what do we know so far?

First, the three video ads followed a similar pattern: they were uploaded by newly created YouTube channels and stayed online for approximately 48 hours during the last week of February. Screenshots and media preserved by, for example, Bellingcat and by suspicious users made it possible to identify the channels and the paying entity for at least two of the ads: Nekoplay LLC. The identical modus operandi and design make it highly probable that the third video came from the same source.

Nekoplay LLC is a Delaware company with no recent business activity that appears to have been closed since August 2023, according to the Portuguese newspaper Público. Público used privileged sources to obtain more information on the company, since the state of Delaware is known for keeping this ownership data private. Público discovered that the company was owned by Golden Cat Tech. Inc., a company based in the Virgin Islands and listed in the Panama Papers. The owners of Golden Cat Tech. Inc. are also connected to an Argentinian company called KickAds.

According to a source cited by the same newspaper, KickAds, despite never stating it publicly, was involved in Javier Milei’s campaign and is already working on the Panamanian and Dominican Republic elections. The newspaper Expresso also covered the topic, finding some evidence of KickAds’ involvement in political marketing, a paper trail connecting KickAds’ owners to Nekoplay LLC, and concerns about being blocked on Google Ads. Expresso also looked further into the influence campaigns in Panama, Singapore and Romania, where users had also identified Nekoplay LLC as the paying entity. The newspaper reports the use of deepfakes, money scams and intimidating content.

The connection to Latin America, and specifically Brazil, is strengthened by the name of one of the channels promoting the content, “Bolsonaristas em Portugal”. The extreme-right party Chega, which has expressed support for Milei and Bolsonaro on multiple occasions, has strong social media ties to its Latin American counterparts. A recent report by the Brazilian think tank Democracia em Xeque points out how Chega’s leader was amplified by Brazilian users, benefiting from the difference in scale between Portugal and Brazil and receiving a disproportionate number of interactions relative to the Portuguese social media landscape.

As always, attribution and impact assessment are hindered by a lack of data. None of the Nekoplay LLC ads appears in the Google Ads library, raising the question of whether deleted ads are simply removed or whether YouTube ads are never included at all, since none of the more than 400 ads Nekoplay LLC displays there are YouTube ads. No copies of the videos were found on other social media platforms, apart from posts reporting the case. KickAds has published articles on the potential of WhatsApp as a marketing platform. The messaging platform was used extensively to disseminate content in both Milei’s and Bolsonaro’s campaigns, but limited data access makes it difficult to assess whether it was also used in this case.

Many thanks, Inês! Investigations rarely explore the companies involved in purchasing and delivering ads on platforms, which makes these observations all the more valuable.

Murthy v. Missouri - The Jawboning Narrative

Now turning to the U.S., I would like to discuss the recent developments in the Murthy v. Missouri case, which is known in Europe by other names. Remember the “Censorship Industrial Complex theory”, first reported by Politico journalist Mark Scott? Yup, that’s it.

It is a fine line between influence and interference. Between influence campaigns and information operations. Between disinformation and lies.

It is also a fine line between a narrative that is simply leveraged for political gain and one that is actually part of an information manipulation campaign, with malign intent to harm and threaten national and international security.

As, at least in my personal view, this narrative has the potential to harm the international counter-FIMI community and to threaten years of painstaking work building partnerships and trust, I feel it is right to describe the tactics being used here and let readers judge whether it is just a political game or something more, with broader consequences.

But first, a bit of history and an explanation of some complicated U.S. legal terms:

Since 2022, a narrative has been ferociously promoted, claiming that the U.S. government has been censoring public opinion by coercing proxies such as platforms and researchers.

This narrative has been so dominant that it turned into a lawsuit against the U.S. government. The lawsuit, now named Murthy v. Missouri, went through successive stages, whose details I will spare you; the most recent was the U.S. Court of Appeals for the Fifth Circuit last September. The Fifth Circuit found that some of the government’s contacts with social media companies had indeed been coercive in violation of the First Amendment, but it narrowed Judge Doughty’s earlier injunction, which had barred government agencies from most communications with social platforms.

In October 2023, the Supreme Court agreed to hear the case, and oral arguments took place last Monday, the 18th. The Court’s decision, expected before the summer, will determine whether the efforts of U.S. government officials to influence researchers’ work countering information manipulation and social media platforms’ content moderation policies, notably during the Covid-19 pandemic and the 2020 U.S. elections, can be considered “jawboning” and thus a violation of the First Amendment.

What do we mean by “jawboning” in U.S. politics?

I like this precise definition from Dean Jackson (Tech Policy Press) in Kate Klonick’s newsletter: “‘Jawboning’ refers to government efforts to coerce speech intermediaries into removing ‘disfavored’ expression, lest the intermediary face some punishment. The classic case from the 1960s, Bantam Books v. Sullivan, involves Rhode Island sending police officers to bookstores and warning that certain materials might be considered obscene by a state board, which could then refer the bookseller to prosecutors.”

Here in 2024, Republican officials in Missouri and Louisiana are accusing government agencies, such as the Centers for Disease Control and Prevention (CDC), the Federal Bureau of Investigation (FBI), the National Institute of Allergy and Infectious Diseases (NIAID), the State Department and the Cybersecurity and Infrastructure Security Agency (CISA), of “jawboning” social media platforms into removing content during the Covid-19 pandemic and the 2020 elections.

You might say this is yet another example of the never-ending U.S. debate between advocates of total, unlimited free speech and proponents of moderating speech and protecting consumers online. This narrative might seem like just another political campaign to you. However, there have been disturbing signals that could lead us to suspect an information manipulation campaign.

Murthy v. Missouri - Harmful Effects

When I was working at VIGINUM, the French Agency to Counter Foreign Digital Interference, I think I got a bit traumatized by the decree that framed the way we looked at information operations. The French decree states that VIGINUM monitors, detects and characterizes “any phenomena that meet the criteria for defining foreign digital interference”, which are:

Involvement of foreign actor(s)

Manifestly inaccurate or misleading content

Fabricated amplification

Harm to France’s fundamental interests

This means that during investigations, I was always thinking about the potential harmful effects of what I was looking at. Sometimes an information operation has no direct foreign links. It may also share characteristics with an influence campaign. Trying to discern the effects it seeks to cause was, and still is, helpful for characterizing events unfolding online and offline. When we look at the propagation and amplification of the “jawboning” narrative, what are the resulting effects? Can they tell us something about malign intent?

Since the ‘censorship’ and ‘jawboning’ narrative started to develop in 2022, we have observed effects that have harmed domestic actors:

Weakening of state-platform cooperation: cooperation with platforms such as Meta seems to have decreased severely. Meta underlined in its latest quarterly report that “while information exchange continues with experts across our industry and civil society, threat sharing by the federal government in the US related to foreign election interference has been paused since July”. The U.S. State Department also canceled its monthly meeting with Meta to discuss 2024 election preparations and hacking threats.

Chilling effect: civil society organizations and academic researchers have been struggling to find funding for their work countering information manipulation, because of the many subpoenas issued to their members. This is critical, especially in an election year. I can personally attest that moving to the U.S. just last November made me wonder if I had been completely stupid, as funding for my activities seemed difficult (impossible) to find in the U.S., while many initiatives were being launched in Europe.

This has reduced knowledge and awareness of the foreign information manipulation threat targeting the U.S. and other countries this year. Furthermore, if the Court rules in favor of the plaintiffs, it could severely limit the future ability of U.S. agencies to flag foreign influence and interference campaigns, increasing vulnerability to these threats.

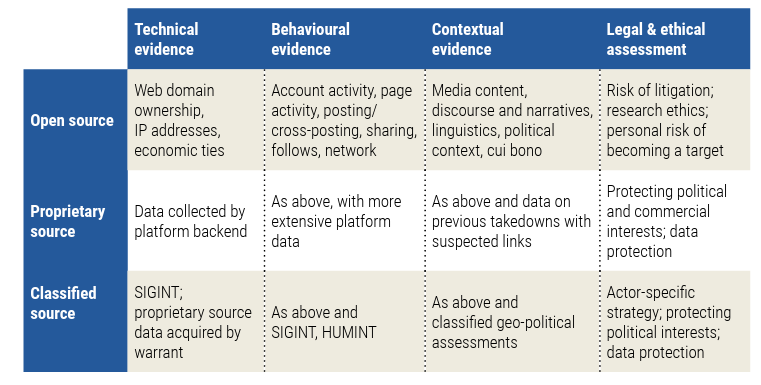

To fully understand what is at stake, it is important to revisit the NATO StratCom CoE’s framework for attribution.

Three categories of sources can be used to investigate information manipulation activities: open sources (researchers and civil society), proprietary sources (platforms) and classified sources (government agencies). Each holds one part of the answer and is blind to at least one of the other sources if no cooperation mechanism is in place.

The first injunction in Murthy v. Missouri, issued last July, was a direct attempt to prevent any collaboration between the three categories of sources, as it barred many federal departments from communicating with social media companies and research centers, except in very limited cases. Weakening cooperation between agencies and platforms sent us straight back ten years, to the period before the 2016 Russian interference in the U.S. elections.

At the international level, this could directly jeopardize cooperation on this issue. The U.S. is a critical ally in the fight against foreign information manipulation and interference. Blinding the U.S. means blinding the international community.

(Don’t worry, I am still French, with a Pavlovian reflex every time ‘De Gaulle’ or ‘champagne’ is mentioned.)

Murthy v. Missouri - Tactics and Methods

How did we get here? What tactics and methods were used to leverage that narrative and turn it into a weapon with such devastating effects? This NYT piece lays it all out. Here is a summary of the tactics it describes:

Creating an organization and a related website to develop a conspiracy narrative: in August 2022, a new organization, the Foundation for Freedom Online, posted a report on its website called “Department of Homeland Censorship: How D.H.S. Seized Power Over Online Speech.” It claimed to have uncovered a “mass coordinated censorship of the internet” by federal officials.

Obtaining authentic documents and using fake experts to amplify the narrative: in October 2022, Elon Musk, the new owner of Twitter/X, obtained internal company communications that he later decided to release to selected journalists. In December 2022, the so-called journalists ‘Matt Taibbi’ and ‘Michael Shellenberger’ released the “Twitter Files”, accompanied by conspiracy theories to push their claims.

Recruiting partisans and baiting influencers to create an echo chamber: from January 2023, the newly created Select Subcommittee on the Weaponization of the Federal Government served as an echo chamber amplifying these conspiracy theories, inviting partisans and influencers to provide further evidence offline and online.

Distorting facts and reframing context: during the Subcommittee’s hearings as well as the Murthy v. Missouri case, “distorting facts” and “reframing context” were the most frequently used tactics. Claims were based on distorted readings of the leaked emails.

Harassment campaigns: great researchers (Renée DiResta, Nina Jankowicz), trust and safety leaders (Yoel Roth) and many others, all working to mitigate the risks of information manipulation activities, were targeted by harassment and defamation campaigns.

The narrative spread, of course, on social networks such as Twitter and Meta’s platforms. It propagated both through traditional media (TV, radio and newspapers) and through newer formats such as newsletters and podcasts. However, the extent to which it broke out of the echo chamber of U.S. politics and law, American platforms and the research community remains unclear.

What is certain is that the actors used all kinds of media to reach their target audiences and convey their theory.

Will it change public perception of state agencies’ work with platforms and erode trust in democratic institutions?

You know that’s the billion-dollar question…

Your Press Corner

Here are this week’s readings to keep you connected to the conversation on global elections and information operations:

Surge in Russian Dark Web Posts About US Election Interference in 2024 (newsweek.com) - The company cautioned that the chatter did not mean that a cyberattack or foreign influence operation was being planned but suggested that improvements in artificial intelligence generated content could make disinformation easier to produce and more convincing.

Two Russians sanctioned by US for alleged disinformation campaign (therecord.media) - The sanctions name Ilya Andreevich Gambashidze and Nikolai Aleksandrovich Tupikin as the founders of two Russia-based companies that U.S. officials believe are involved in a “persistent foreign malign influence campaign at the direction of the Russian Presidential Administration.”

Attributing I-SOON: Private Contractor Linked to Multiple Chinese State-sponsored Groups (recordedfuture.com) - New Insikt Group research provides updated insights on the recent i-SOON leak.

Deep fake AI services on Telegram pose risk for elections | Computer Weekly - Security analysts have identified more than 400 channels promoting deep fake services on the Telegram Messenger app, ranging from automated bots that help users create deep fake videos to individuals offering to create bespoke fake videos.

The Shortlist: Seven Ways Platforms Can Prepare for the U.S. 2024 Election (protectdemocracy.org) - Recommendations from the think tank Protect Democracy.

IFES Announces Voluntary Election Integrity Guidelines for Technology Companies | IFES - The International Foundation for Electoral Systems - These guidelines aim to help establish a framework for meaningful engagement and support between election authorities and technology companies.

Russian Presidential Campaign Hints at Renewed Support for War – Alliance For Securing Democracy (gmfus.org) - Analysis by the ASD, drawing upon their Election Monitoring Dashboard.

In Sisi’s Egypt 'laws aimed at curbing disinformation are instruments of political repression' | African Arguments - Two global disinformation experts discuss how the criminalisation of ‘fake news’ became an excuse for a clampdown on journalists and popular online commentators.

The United States of America and the Republic of Latvia sign Memorandum of Understanding to Expand Collaboration on Countering Foreign State Information Manipulation - United States Department of State - Latvia endorsed the US Framework on Countering Foreign State Information Manipulation.

Exclusive: Trump launched CIA covert influence operation against China | Reuters - President Donald Trump authorized the Central Intelligence Agency to launch a clandestine campaign on Chinese social media aimed at turning public opinion in China against its government, according to former U.S. officials with direct knowledge of the highly classified operation.

How Trump’s Allies Are Winning the War Over Disinformation - The New York Times (nytimes.com) - In case you missed it.

Meet the Arizona Election Official Combating Misinformation One Tweet at a Time | WIRED - Arizona Superman.

Existing Election Security Grants Can Help States Counter Election Disinformation through Voter Education - R Street Institute - The piece emphasizes countering rather than prohibiting disinformation and outlines one path focused on public education and the distribution of accurate information about voting processes.

https://cyber.fsi.stanford.edu/io/news/ai-spam-accounts-build-followers - new research by two of the most prominent researchers in this field.

Fact-opinion differentiation | HKS Misinformation Review (harvard.edu) - New research showing that affective partisan polarization promotes systematic partisan error: as views grow more polarized, partisans increasingly see their side as holding facts and the opposing side as holding opinions.

People trust themselves more than they trust the news. They shouldn’t. - Columbia Journalism Review (cjr.org) - There is a growing disconnect between how journalists see themselves and how people see journalists.

Twitter Use Related to Decreases in Well-Being, Increases in Political Polarization, Outrage, and Sense of Belonging | TechPolicy.Press - It’s not all bad news.

Election in Turkey

A quick update on Turkey’s upcoming local elections before we close today’s newsletter.

Local elections are planned for 31 March in Turkey. One of the decisive battles for the president’s party is to win back Istanbul and Ankara, which it lost in 2019. Winning these big cities could give Erdogan the political leverage to pursue a new constitution and, like his Russian or Chinese counterparts, hold on to power for the next decade.

The stakes are so high for President Erdogan that he seems to have resumed using manipulative means to win the election. There are reports that a deepfake video has been circulating to encourage voting for the AK Party’s candidate in Istanbul.

Other videos could surface, as deepfakes were already used in last year’s presidential election without being moderated or removed. Combined with ongoing prosecutions of independent media in the country, this leaves little hope that the elections will be free and fair.