Election Integrity in India: an American Dream Turning Into a Digital Nightmare

Indian Election Local Context - YouTube in the Spotlight - East Asian Actors in Microsoft’s Latest Threat Report

Hey there.

This week, it is time to dive into the Indian online realm and its complexities to have some kind of understanding of what awaits us in the next Lok Sabha elections, which will take place between April 19 and June 1, 2024.

India is a key player in the competition between democracies and authoritarian states. 968 million people will vote in this election, and there are many issues at stake. Actually, too many. So, this week’s newsletter will only address the first part: the context and the domestic digital practices, while next week we will discuss the FIMI threats.

This will allow some space to present the latest Microsoft Threat Report that was published last week and was kind of exploited to send warning signals all over the globe. But is it really worth our attention?

What to expect:

Indian Lok Sabha Election - Context

India will hold its general election from April 19 to June 1 to select 543 of 545 members of the Lok Sabha, the lower house of Parliament. 968 million Indians are eligible to vote in this election. The prime minister will then be appointed by the President from the party or coalition that achieves a majority. Note: In India, the prime minister is the head of government with executive authority.

On one side, current Prime Minister Narendra Modi, who leads the Hindu nationalist Bharatiya Janata Party (BJP), which is part of the right-wing National Democratic Alliance currently ruling the country, is seeking a third term. On the other side, 24 opposition parties have formed a left-wing coalition, the Indian National Developmental Inclusive Alliance (INDIA).

In India, electronic voting is commonly used and has replaced paper ballots since 2001. According to the Brookings Institution, electronic voting has contributed to improving India’s democracy over the years, both decreasing electoral fraud and increasing representativeness. However, there have been claims ahead of the election by opposition parties that the electronic voting system is not reliable and could contribute to rigging the election.

The successive phases of the election, the two-bloc contest, and the sensitivity to electoral fraud narratives are all opportunities for threat actors to polarize and manipulate the national debate.

But that’s not all.

There are several domestic issues that could be leveraged before, during and after the election:

Unemployment, which has been increasing since Prime Minister Modi’s first term,

Religious divisions, as Hindu nationalists have been attacking the Muslim minority,

Decrease in freedom of the press and freedom of speech.

In the field of information and election integrity, the last issue is very concerning. According to Human Rights Watch, the Indian authorities increasingly control the digital platforms and collect massive amounts of data on their users. As the parties campaign extensively through digital platforms, owning users’ data and controlling the digital platforms can largely support the ability of Indian authorities to influence what Indian users read and watch online.

There have been reports of internet shutdowns, a common government tactic to suppress dissent and control the online space. This has a negative effect, depriving Indians of access to reliable news and thus triggering rumors and misinformation.

The government also applies informal pressure to tech companies. Over the past years, tech companies such as X (formerly Twitter) have been asked to take down content at the demand of the BJP. While tech companies may have tried to resist the pressure, the orders have continued to flow. In March 2023, X was ordered to restrict over 120 accounts in India, in an attempt to suppress speech from journalists, politicians and activists.

The government has also cracked down on the freedom of the press and freedom of expression, multiplying arrests and prosecutions. Harassment campaigns also target journalists and media. For instance, Mohammed Zubair, the co-founder of Alt News, an Indian fact-checking website, is regularly targeted by the Indian government and faces legal threats after accusations two years ago that he insulted a Hindu god in a tweet.

Indian Lok Sabha Election - Digital Practices

At every election, the Indian online space seems to be dominated by one major platform and its related challenges to prevent the spread of manipulated information and hoaxes. The 2019 election was dubbed the ‘WhatsApp election’ by French expert Tara Varma, currently a visiting fellow at the Brookings Institution. In her commentary, she indicated that WhatsApp became central to facilitating propaganda by the BJP, which created WhatsApp groups along caste or religious lines to incite hatred between Hindus and Muslims. It was part of the BJP’s polarization strategy.

This appears to remain true today, as AFP reports that Facebook and WhatsApp are being used to propagate hatred and incitement to violence against Muslims. According to Raqib Hameed Naik, from the research group Hindutva Watch, the BJP’s IT Cell is responsible for generating anger towards minorities.

The BJP’s IT Cell manages the social media campaigns for the party. Over the years, it has proved its ability to employ all of Meta’s platforms (Facebook, WhatsApp and Instagram) to disseminate its narratives directly to Indians’ mobile phones.

According to Shahana Sheikh, it relies on a network strategy: for example, in 2022 in Uttar Pradesh state, almost all functionaries of the BJP and the SP parties used smartphone apps for party purposes, especially WhatsApp and Facebook. They used WhatsApp to communicate with voters during their campaigns around forty to fifty times a day on average.

How will platforms monitor the debate this year?

Meta has announced its collaboration with the news agency Press Trust of India (PTI) to support and expand fact-checking programs in India. According to this report, the focus of the program is to address viral misinformation.

Meanwhile, X will have community notes, but concerns are already raised that they could be weaponized by the BJP’s IT cell.

While in 2024 TikTok is out of the game, YouTube has become the main focus of scrutiny. A few days ago, the rights groups Global Witness and Access Now released a study highlighting the platform’s lack of enforcement against harmful content, even though India is YouTube’s largest market with 462 million users. Let’s dive into the study!

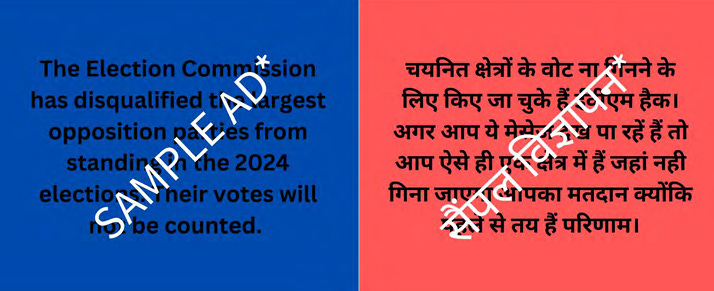

The rights groups developed 16 short pieces of election disinformation content drawing upon existing narratives. More than half of the content was tailored to India’s context while the remainder was generic language related to elections. The content was translated from English into Hindi and Telugu.

The content was then turned into short videos, 48 in total (16 pieces in three languages), which were submitted to YouTube as ad creatives in February and March 2024. Three YouTube accounts were created to upload the videos and submit the ads, with a later publication date to prevent the videos from going live immediately. Within a day, YouTube approved all the ads for publication.

According to the study, YouTube failed to detect the ads that violated YouTube’s policy on election misinformation, as described in the Community Guidelines.

The rights groups conclude the study with several recommendations to Google, notably:

conducting a thorough evaluation of the advertising approval process and the election/political advertisements policy,

consulting with civil society, journalists, fact-checkers, and other stakeholders in a sustained manner to meaningfully incorporate feedback into policies,

making the Google Ads Transparency Centre an archive for all advertisements,

publishing detailed reports about how YouTube’s election misinformation policies are enforced,

conducting an investigation into the discrepancy in treatment of election misinformation content in countries such as India and Brazil compared to the US, identifying what went wrong, and showing what steps are being taken to address and prevent this in the future.

This last recommendation echoes other warnings, from whistleblower Frances Haugen and from research centers such as the Center for Democracy and Technology (CDT), which investigates content moderation in the Global South. According to the CDT, “the lack of resources and content moderation practices have inadvertently resulted in the suspension of user accounts and/or content, the supercharging of hate speech, and the proliferation of misleading content in the Global South”.

Following this report by the rights groups, Google disagreed with the findings.

“Not one of these ads ever ran on our systems and this report does not show a lack of protections against election misinformation in India. Our policies explicitly prohibit ads making demonstrably false claims that could undermine participation or trust in an election, which we enforce in several Indian languages,” a Google spokesperson told Cybernews.

According to Google, the ads would have been caught in the subsequent stages of review. This first review was apparently done through automated systems that labeled the content as approved.

It is difficult to say what would have happened in the subsequent stages. But what is sure is that automation failed, which means that AI systems are not yet capable of detecting false, misleading, or harmful content. Human review will still be needed.

Your Press Corner

Here are the weekly readings to keep you connected to all the conversations on global elections and information operations:

Anonymous accounts use right-wing channels to spread misinformation | AP News - The incident sheds light on how social media accounts that shield the identities of the people or groups behind them through clever slogans and cartoon avatars have come to dominate right-wing political discussion online even as they spread false information.

Jourová: US ‘calm’ about election manipulation threat – Euractiv - She added that she had spoken to several people in charge of US elections and noted a “certain level of calm.”

U.S., Finland Agree To Cooperate On Combating Foreign Disinformation (rferl.org) - and one new member added to the club!

EU and U.S. still dialogue on trade and technology (eunews.it) - Artificial intelligence, 6G, semiconductors, raw materials, respect for fundamental rights in the online sphere. At the sixth high-level TTC meeting in Leuven, the two partners took stock of work on key digital dossiers, while the Trump threat on Brussels-Washington relations looms on the horizon.

U.S-EU Joint Statement of the Trade and Technology Council | The White House - in case you are curious, the full text.

RSF condemns police attacks and arrests of reporters covering post-election protests in Türkiye | RSF - At least eight journalists were attacked, three others were arrested and another was threatened for covering or referring to post-election protests in eastern Türkiye and Istanbul. These serious press freedom violations must stop, says Reporters Without Borders (RSF).

Information Manipulation: A Growing Threat In Pakistan (thefridaytimes.com) - The PTI turned to social media as a powerful weapon. They organized virtual rallies (jalsas) and creatively used AI-generated audios of Imran Khan to boost their campaign.

Network of Facebook pages pushes pro-Cha Cha narrative, claims “nothing to fear” in revisions (mindanews.com) - These FB pages are affiliated with Pilipinas Today that is managed by Sartine IT Solutions, which is linked to technology firm Breyalex.

'Mushroom Websites' Spread A Deluge Of Disinformation In Bulgaria (rferl.org) - When Poland recently hosted NATO military exercises, disinformation operatives sprang to action in Bulgaria, especially fertile ground for Kremlin-friendly narratives.

Francophobes in the Kremlin - EUvsDisinfo - Instead of sending love letters to the Élysée, the pro-Kremlin disinformation apparatus has taken France into its crosshairs by spreading lies and gross misogyny. Is Moscow afraid of something?

The rise of climate disinformation in Ghana | International Journalists' Network (ijnet.org) - In general, fact-checking organizations in Ghana should focus more on climate change disinformation and its negative impact on citizens and communities, said Gadugah, adding that their work also requires more financial support.

Foreign interference: how Parliament is fighting the threat to EU democracy | Topics | European Parliament (europa.eu) - Find out how the EU is fighting back.

Belgian research powerhouse turns hawkish on China – POLITICO - The European Union's top research hub is second-guessing some of its global collaboration in the face of rising realpolitik.

German AfD politician rejects Russian payment claims – DW – 04/04/2024 - Petr Bystron said he was a target of a "defamation campaign" following reports that he took money from a Russian disinformation site. Czech officials reportedly have audio recordings incriminating Bystron.

Failure to communicate: What Week 2 of the foreign interference inquiry revealed | CBC News - Meanwhile in Canada, reflections on past errors.

Why Brazil is Investigating Elon Musk for Disinformation - Bloomberg - In a court document, the top justice said the billionaire owner of X Corp. (formerly Twitter) “started a disinformation campaign” and his company is abusing its economic power to “illegally influence public opinion.”

Musk's X publishes fake news headline on Iran-Israel generated by its own AI chatbot - Truth or Fake (france24.com) - When verified users on X started sharing a fake news story, claiming that Iran attacked Israel, generated by the AI chatbot.

Meta (META) Downplays AI Disinformation Threat in US 2024 Elections - Bloomberg - AI not used on “systemic basis” to subvert elections according to Nick Clegg, Meta’s president of global affairs.

Don’t play it by ear: Audio deepfakes in a year of global elections | Lowy Institute - From robocalls to voice clones, generative AI is allowing malicious actors to spread misinformation with ease.

How Can AI Be Used to Build More Democratic Societies? (northeastern.edu) - In Taiwan, government officials are leveraging the power of AI by working with industry partners to create more collaborative and democratic projects, Tang explains.

How Tech Giants Cut Corners to Harvest Data for A.I. - The New York Times (nytimes.com) - OpenAI, Google and Meta ignored corporate policies, altered their own rules and discussed skirting copyright law as they sought online information to train their newest artificial intelligence systems.

Nine out of ten Brazilians admit to having believed fake news | Agência Brasil (ebc.com.br) - Regarding the content of the fake news they believed, 64 percent was about products being sold, 63 percent concerned proposals in electoral campaigns, 62 percent dealt with public policies—like vaccination—and 62 percent was about scandals involving politicians. There were also 57 percent who said they had believed untrue content about the economy and 51 percent in fake news involving public security and the prison system.

A campaign to highlight the role of the press in combating disinformation (brusselstimes.com) - With two months to go before the elections and with disinformation destabilising democracy, the publishers of the French- and German-language daily press, brought together under the umbrella of Lapresse.be, are launching a multimedia campaign.

Assessing Claims About Mail-In Voting and Electoral Fraud (aol.com) - Fact-checking the ‘electoral fraud’ narrative can only be a healthy countermeasure.

Mozilla suggests changes in WhatsApp to tackle poll disinformation (medianama.com) - The creator of Mozilla Firefox has published a statement stating that WhatsApp isn’t doing much to detect and stop “networked disinformation and hate speech” on its platform.

In Taiwan, a group is battling fake news one conversation at a time, with focus on seniors | The Associated Press (businessmirror.com.ph) - Nearly six years later, with just one formal employee and a team of volunteers, Fake News Cleaner has hosted more than 500 events, connecting with college students, elementary-school children—and the seniors that, some say, are the most vulnerable to such efforts.

A MAGA Troll’s Memes Were Blatant Disinfo. But Were They a Crime? (theintercept.com) - Looking at Douglass Mackey’s case and his posts about Hillary Clinton and the 2016 election as the U.S. Court of Appeals for the 2nd Circuit considered last April 5 whether Mackey’s conviction and sentence should stand.

Microsoft Threat Intelligence Report: Can the Past Predict the Future?

Last Thursday, Microsoft released its new threat intelligence report, which addresses East Asia cyber and informational threat actors’ tactics, techniques and procedures (TTPs).

What I immediately noticed was the flood of online news, using this report without necessarily mentioning Microsoft in the headline, to warn countries all over the globe that China will use AI to disrupt elections in the US, South Korea and India. It was really overwhelming, and probably made us miss other equally worthy news.

When I read the report, I was struck by the fact that Microsoft was summarizing previous recent investigations, not describing current ongoing campaigns targeting South Korea, India or the U.S. How did we go from describing the past to predicting the future with such assertiveness?

At the end of the report, there is this small sentence: “With major elections taking place around the world this year, particularly in India, South Korea and the United States, we assess that China will, at a minimum, create and amplify AI-generated content to benefit its interests.”

I feel like there was a significant leap between describing past campaigns and making the assessment. Where are the other geopolitical, economic, security, cultural, historical, human, cognitive indicators? Is there any other type of intelligence, or do we just assume that past trends are reliable trends to foresee the future? I will leave my frustration here to dive into the findings.

What’s new? What are the main key findings?

Microsoft focuses on Storm-1376, also known as Spamouflage aka Dragonbridge, a Chinese threat actor. Sorry to interrupt again, but when will we stop giving new names to already long-known threat actors? Spamouflage was reported in 2019 by Graphika. Fewer embellishments would certainly help information-sharing. If every researcher and threat intelligence company comes up with a new name, we will flood ourselves with names to remember instead of focusing on what really matters.

What really matters?

According to Microsoft’s report, AI-generated content is increasingly created or amplified by the Chinese actor.

Side note: How did they identify which content was created and which content was amplified? Microsoft’s team did not give any explanations.

Examples mentioned by the report include:

Taiwan’s election day, when Spamouflage posted AI-generated audio clips of Foxconn owner Terry Gou, an independent candidate in Taiwan’s presidential race, allegedly endorsing another candidate. What is interesting in this example is that the GenAI audio followed a previous unsuccessful attempt to support this narrative through the dissemination of a fake letter supporting the same claim.

One hypothesis we can make here is that threat actors may perceive GenAI content as a secondary approach to take after their initial attempt has failed.

Taiwan and Myanmar’s audiences targeted since 2023 by AI-generated news anchors using the CapCut tool (ByteDance’s technology). According to Microsoft, this tactic has been increasingly used.

Another hypothesis we can draw is that the growth of this TTP can perhaps be linked to the ease with which the Chinese threat actor can utilize ByteDance’s technology. This could indicate that the threat actor has achieved satisfactory results through its usage.

Canadian members of parliament targeted by GenAI videos. These videos were part of a harassment campaign.

GenAI memes also targeted Taiwan DPP presidential candidate William Lai.

Side note: AI-generated content, according to the company, is not necessarily associated with audience engagement. Microsoft uses this very obscure phrasing: “These campaigns achieved varying levels of resonance with no singular formula producing consistent audience engagement”. I guess it’s a way to check the AI box without having a clue how significant it is to talk about assessing AI in the context of information operations.

What is interesting to note is that Microsoft observed Spamouflage aka Dragonbridge aka Storm-1376 across 8 information operations, which span over 175 websites and 58 languages. This gives an appreciation of the size of this threat actor.

One common characteristic described is the more frequent use of conspiratorial narratives, especially during breaking news and geopolitical events. Examples mentioned in the report include:

False claims that a US government “weather weapon” started the Hawaii wildfires in August 2023. These claims were supported by GenAI images and amplified through cross-posting.

Casting doubt on the International Atomic Energy Agency (IAEA)’s scientific assessment regarding the safety of the release by Japan of treated radioactive wastewater into the Pacific Ocean in August 2023. It is interesting to note that other social assets such as Chinese state-media affiliated influencers and local protesters in South Korea were also mobilized to support and amplify the narrative.

False claims that the U.S. government may have caused the derailment of a train carrying molten sulfur in Rockcastle County, Kentucky.

These examples show that attacking trusted sources such as governments or agencies seems to be a recurring pattern of Spamouflage aka Dragonbridge aka Storm-1376.

Finally, the Microsoft Threat Analysis Center also observed an increase in additional “sockpuppet accounts” that are assessed with moderate confidence to be run by the CCP. The center also observed that these accounts may have been created 10 years ago, suggesting that Chinese threat actors seek to acquire or re-purpose previous sets of inauthentic accounts.

What is particularly worth noting in this latter part of the report is the mention of the recurrent use of questions in the posted content targeting U.S. audiences. These accounts ask followers whether they agree with a given political topic. This could be both a way to connect and engage with the audience and a way to segment it. As we know that Chinese threat actors are particularly poor at creating engagement and leveraging domestic issues, if this TTP shows some results, it could be a game changer.

But I still can’t predict the future.